Sensor data formats

This section describes the different data types and formats each sensor model uses when saving data. Refer to the page on how to capture sensor data for instructions on saving sensor model output data.

Lidar point cloud data

File format:

.bin

Lidar point cloud data is saved as a 1-D array in a .bin file. Each point consists of its x, y, and z coordinates in 3D space and its intensity, all stored as float values. The origin is the point at which the lidar is mounted.

For Python users, the point cloud can be loaded by using the NumPy np.fromfile function.
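A minimal loading sketch follows. It assumes each point is stored as four little-endian 32-bit floats in the order x, y, z, intensity. The description above does not state the float width or byte order, so check these against your own files. The function name load_lidar_bin is hypothetical.

```python
import numpy as np

# Assumption: each point is four little-endian float32 values
# (x, y, z, intensity), written back to back in a flat 1-D array.
FIELDS_PER_POINT = 4


def load_lidar_bin(path: str) -> np.ndarray:
    """Load a lidar .bin file and return an (N, 4) array of x, y, z, intensity."""
    flat = np.fromfile(path, dtype="<f4")  # flat 1-D array of 4-byte floats
    if flat.size % FIELDS_PER_POINT != 0:
        raise ValueError(
            f"{path}: {flat.size} values is not a multiple of {FIELDS_PER_POINT}"
        )
    return flat.reshape(-1, FIELDS_PER_POINT)


# Usage (hypothetical file path):
# points = load_lidar_bin("SaveFile/SensorData/LIDAR_0/example.bin")
# xyz, intensity = points[:, :3], points[:, 3]
```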

Files are saved in SaveFile/SensorData/LIDAR_{id}. File names are generated automatically from the date and time at which the point cloud data was saved.

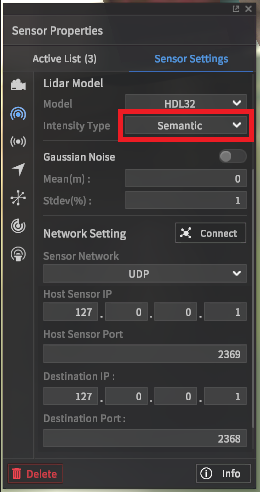

Semantic segmentation

Setting the Intensity Type option to semantic labels each point's intensity with a value that depends on the type of object the point belongs to. This feature can be used to create pre-labeled lidar point cloud datasets. By default, semantic segmentation values follow the classification table below.

| Class | Intensity (unsigned int) |
|---|---|
| Asphalt | 127 |
| Building | 153 |
| Traffic Light | 190 |
| White Lane | 255 |
| Yellow Lane | 170 |
| Blue Lane | 144 |
| Road Sign | 127 |
| Traffic Sign | 132 |
| Crosswalk | 136 |
| Stop Line | 85 |
| Sidewalk | 129 |
| Road Edge | 178 |
| Standing OBJ | 109 |
| Object On Road | 92 |
| Vehicle | 86 |
| Pedestrian | 118 |
| Obstacle | 164 |
| StopLinePrefabs | 92 |
| Light | 94 |
| Obstacle1 | 67 |
| Obstacle2 | 101 |
| Obstacle3 | 101 |
| Obstacle4 | 67 |
| Obstacle5 | 101 |
| Sedan | 125 |
| SUV | 135 |
| Truck | 145 |
| Bus | 155 |
| Van | 165 |
| Stroller | 40 |
| Stroller_person | 50 |
| ElectronicScooter | 60 |
| ElectronicScooter_Person | 70 |
| Bicycle | 80 |
| Bicycle_Person | 90 |
| Motorbike | 100 |
| Motorbike_Person | 110 |
| Sportbike | 120 |
| Sportbike_Person | 130 |
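As an illustration, the sketch below selects the points of one class from an (N, 4) x, y, z, intensity array. The dictionary holds only a few rows of the table above, and SEMANTIC_INTENSITY and points_of_class are hypothetical names. Because some classes share a value (for example, Asphalt and Road Sign are both 127, and Obstacle2, Obstacle3, and Obstacle5 are all 101), matching on intensity alone cannot always tell those classes apart.

```python
import numpy as np

# A few rows of the default semantic intensity table; add others as needed.
SEMANTIC_INTENSITY = {
    "Asphalt": 127,
    "Crosswalk": 136,
    "Vehicle": 86,
    "Pedestrian": 118,
}


def points_of_class(points: np.ndarray, class_name: str) -> np.ndarray:
    """Return the rows of an (N, 4) x, y, z, intensity array labelled class_name."""
    label = SEMANTIC_INTENSITY[class_name]
    # Intensities are stored as floats; round before comparing with the integer label.
    mask = np.rint(points[:, 3]).astype(np.int64) == label
    return points[mask]
```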

Instance segmentation

Instance segmentation works the same way as semantic segmentation, but labels each individual object instance in the scene rather than each object type. Intensity values are assigned according to the table below.

| Class | Intensity (unsigned int) |
|---|---|
| Vehicle | 0 ~ 149 |
| Pedestrian | 150 ~ 254 |
| Obstacle | Random (50, 100, 150, 200, 250, 45, 90, …) |
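A sketch for grouping points into instances follows, assuming the intensity column of an (N, 4) x, y, z, intensity array carries the instance label. Obstacle values are random and can fall inside the vehicle or pedestrian ranges (for example, 50 or 150), so the ranges alone are not a reliable class test; the sketch therefore only groups points by label and computes each group's centroid. split_instances and instance_centroids are hypothetical names.

```python
import numpy as np


def split_instances(points: np.ndarray) -> dict[int, np.ndarray]:
    """Group an (N, 4) x, y, z, intensity array by its integer instance label."""
    labels = np.rint(points[:, 3]).astype(np.int64)
    return {int(lab): points[labels == lab] for lab in np.unique(labels)}


def instance_centroids(points: np.ndarray) -> dict[int, np.ndarray]:
    """Mean x, y, z of each instance, keyed by instance label."""
    return {
        lab: group[:, :3].mean(axis=0)
        for lab, group in split_instances(points).items()
    }
```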

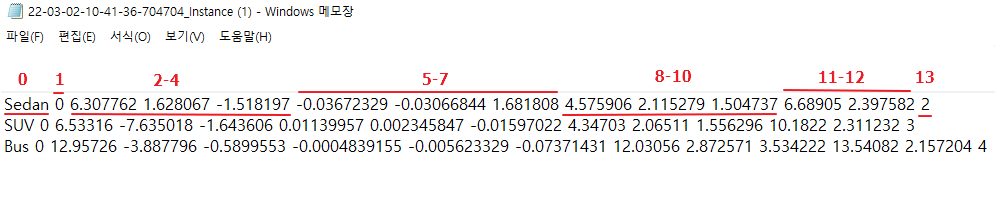

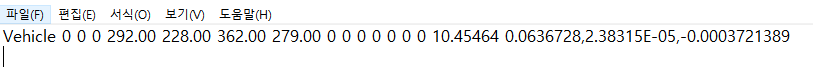

3D bounding box

From version 22.R2.0, the 3D bounding box file (.txt) provides all three rotation angles of each box to improve the perception performance of autonomous vehicles (AVs). Roll and pitch have been added; yaw was already provided.

File format:

.txt

3D bounding boxes (bboxes for short) are boxes in three-dimensional space that delineate the region in which an object is located. 3D bbox data is saved in a plain text file according to the following specification.

0 : Class name of the 3D bbox

Vehicle, Pedestrian, Object

1 : Class ID of the 3D bbox

Vehicle : 0

Pedestrian : 1

Object : 2

2-4 : center_x, center_y, center_z of the 3D bbox (unit : m)

Origin of the object coordinate system, expressed in the LiDAR coordinate system.

The LiDAR coordinate system follows the ISO 8855 convention: x-axis forward, y-axis left, z-axis up.

5-7 : roll (x-axis), pitch (y-axis), yaw (z-axis) of the 3D bbox (unit : radian)

Rotation angles of the object coordinate system relative to the LiDAR coordinate system.

8-10 : size_x (x-axis), size_y (y-axis), size_z (z-axis) of the 3D bbox (unit : m)

11-12 : Relative distance and relative speed (unit : m, m/s)

13 : Unique ID of the detected object
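The sketch below parses one line of the 3D bbox file and computes the eight box corners in the LiDAR frame. Several details are assumptions not stated in the specification: fields are separated by whitespace and/or commas, (center_x, center_y, center_z) is the geometric center of the box, and roll, pitch, and yaw compose in Z-Y-X order, R = Rz(yaw) Ry(pitch) Rx(roll). Box3D, parse_box_line, rotation_matrix, and box_corners are hypothetical names.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Box3D:
    """One row of the 3D bbox file (field names are illustrative)."""
    class_name: str     # field 0
    class_id: int       # field 1
    center: np.ndarray  # fields 2-4, metres, LiDAR frame (x forward, y left, z up)
    rpy: np.ndarray     # fields 5-7, roll/pitch/yaw in radians
    size: np.ndarray    # fields 8-10, size_x/size_y/size_z in metres
    distance: float     # field 11, metres
    speed: float        # field 12, m/s
    object_id: int      # field 13


def parse_box_line(line: str) -> Box3D:
    # Assumption: 14 fields per line, separated by whitespace and/or commas.
    f = line.replace(",", " ").split()
    if len(f) != 14:
        raise ValueError(f"expected 14 fields, got {len(f)}")
    v = [float(x) for x in f[2:13]]  # numeric fields 2-12
    return Box3D(
        class_name=f[0],
        class_id=int(float(f[1])),
        center=np.array(v[0:3]),
        rpy=np.array(v[3:6]),
        size=np.array(v[6:9]),
        distance=v[9],
        speed=v[10],
        object_id=int(float(f[13])),
    )


def rotation_matrix(roll: float, pitch: float, yaw: float) -> np.ndarray:
    # Assumption: Z-Y-X order, i.e. R = Rz(yaw) @ Ry(pitch) @ Rx(roll).
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return rz @ ry @ rx


def box_corners(box: Box3D) -> np.ndarray:
    """Return the 8 corners of the box as an (8, 3) array in the LiDAR frame."""
    # Assumption: the center fields give the geometric center of the box.
    signs = np.array([[a, b, c] for a in (-1, 1) for b in (-1, 1) for c in (-1, 1)])
    local = signs * (box.size / 2.0)  # corners in the object frame
    return local @ rotation_matrix(*box.rpy).T + box.center
```

If your boxes have nonzero roll or pitch, compare a few computed corners against the visualized boxes to confirm that the assumed rotation order matches the simulator output.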

Files are saved in SaveFile/SensorData/LIDAR_{id}. File names are generated automatically from the date and time at which the point cloud data was saved.

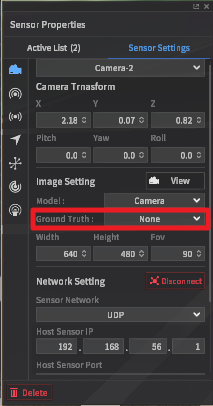

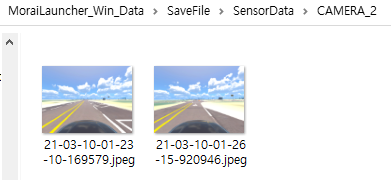

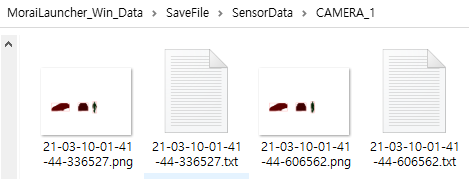

RGB camera image

File format:

.jpeg

To save images in RGB format, install the camera, then select ‘None’ in the Ground Truth field of the Image View section in the Camera Setting panel.

Files are saved in SaveFile/SensorData/CAMERA_*. File names are generated automatically from the date and time at which the image is saved.

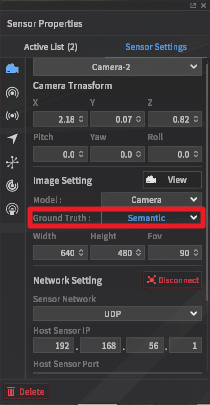

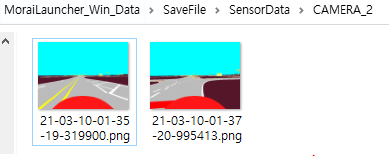

Semantic segmentation image

File format:

.png

To save semantic label images, install the camera, then select ‘Semantic’ in the Ground Truth field of the Image View section in the Camera Setting panel.

The RGB values of the segmentation label map are as follows.

| Class | R | G | B |
|---|---|---|---|
| Sky | 0 | 255 | 255 |
| ETC | 85 | 22 | 42 |
| Asphalt | 127 | 127 | 127 |
| Building | 153 | 255 | 51 |
| Traffic Light | 255 | 74 | 240 |
| White Lane | 255 | 255 | 255 |
| Yellow Lane | 255 | 255 | 0 |
| Blue Lane | 0 | 178 | 255 |
| Road Sign | 204 | 127 | 51 |
| Traffic Sign | 99 | 48 | 250 |
| Crosswalk | 76 | 255 | 76 |
| Stop Line | 255 | 0 | 0 |
| Sidewalk | 255 | 102 | 30 |
| Road Edge | 178 | 178 | 178 |
| Standing OBJ | 113 | 178 | 37 |
| Object On Road | 178 | 9 | 90 |
| Vehicle | 255 | 2 | 2 |
| Pedestrian | 98 | 2 | 255 |
| Obstacle | 236 | 255 | 2 |
| StopLinePrefabs | 255 | 22 | 0 |
| Light | 255 | 2 | 25 |
| Obstacle1 | 100 | 100 | 2 |
| Obstacle2 | 200 | 100 | 2 |
| Obstacle3 | 100 | 200 | 2 |
| Obstacle4 | 2 | 100 | 100 |
| Obstacle5 | 2 | 200 | 100 |
| Sedan | 255 | 60 | 60 |
| SUV | 255 | 75 | 75 |
| Truck | 255 | 90 | 90 |
| Bus | 255 | 105 | 105 |
| Van | 255 | 120 | 120 |
| Stroller | 120 | 0 | 0 |
| Stroller_person | 120 | 15 | 15 |
| ElectronicScooter | 140 | 20 | 20 |
| ElectronicScooter_Person | 140 | 35 | 35 |
| Bicycle | 160 | 40 | 40 |
| Bicycle_Person | 160 | 55 | 55 |
| Motorbike | 180 | 60 | 60 |
| Motorbike_Person | 180 | 75 | 75 |
| Sportbike | 200 | 80 | 80 |
| Sportbike_Person | 200 | 95 | 95 |

Files are named and saved in the same way as RGB images.
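The sketch below builds a boolean mask for one class from a semantic label image by exact RGB matching. It includes only a few rows of the table above, reads files with Pillow (an assumed dependency, imported only inside load_rgb), and uses hypothetical names (LABEL_COLORS, load_rgb, class_mask). Exact matching works because PNG is a lossless format, so label colors are stored unchanged.

```python
import numpy as np

# A subset of the default label colors (R, G, B); add the remaining rows as needed.
LABEL_COLORS = {
    "Sky": (0, 255, 255),
    "Asphalt": (127, 127, 127),
    "Crosswalk": (76, 255, 76),
    "Vehicle": (255, 2, 2),
    "Pedestrian": (98, 2, 255),
}


def load_rgb(path: str) -> np.ndarray:
    """Read a label PNG as an (H, W, 3) uint8 array (requires Pillow)."""
    from PIL import Image  # imported here so class_mask works without Pillow

    with Image.open(path) as img:
        return np.asarray(img.convert("RGB"))


def class_mask(image: np.ndarray, class_name: str) -> np.ndarray:
    """Return an (H, W) boolean mask of pixels whose color equals the class color."""
    color = np.array(LABEL_COLORS[class_name], dtype=np.uint8)
    return np.all(image[..., :3] == color, axis=-1)
```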

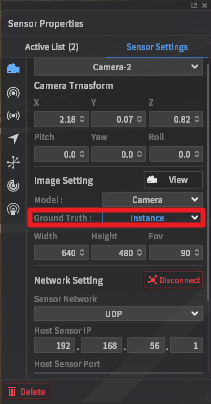

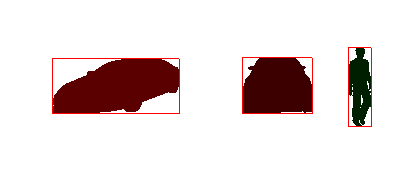

Instance image

Image file format:

.png

2D bounding box file format:

.txt

To save instance label images and 2D bboxes, install the camera, then select ‘Instance’ in the Ground Truth field of the Image View section in the Camera Setting panel.

In instance label images, each object is given a different pixel value even when objects belong to the same class, so individual objects within a class can be distinguished by ID.

The 2D bbox text file follows a format similar to that of the KITTI dataset.

1 : Class of the 2D bbox

Vehicle

Pedestrian

Object

2 : Truncated (1). Always output as 0.

3 : Occluded (1). Always output as 0.

4 : Alpha (1). Always output as 0.

5-8 : Coordinates of the upper-left and lower-right corners of the 2D bbox (x1, y1, x2, y2, respectively)

9-11 : Object dimensions. Always output as 0, because this information is provided by the point cloud 3D bbox.

12-14 : Object location. Always output as 0, because this information is provided by the point cloud 3D bbox.

15 : Yaw angle of the object. Always output as 0, because this information is provided by the point cloud 3D bbox.

16 : Relative distance between the object and the camera

17-19 : Relative velocity between the object and the camera in x, y, z
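A parsing sketch for one line of the 2D bbox file follows, assuming the 19 fields are whitespace-separated as in KITTI label files. Only the fields that carry data (class, corners, distance, relative velocity) are kept. Box2D and parse_2d_bbox_line are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    class_name: str      # field 1
    x1: float            # fields 5-8: upper-left corner (x1, y1)
    y1: float
    x2: float            # and lower-right corner (x2, y2)
    y2: float
    distance: float      # field 16
    rel_velocity: tuple  # fields 17-19 (x, y, z)

    @property
    def width(self) -> float:
        return abs(self.x2 - self.x1)

    @property
    def height(self) -> float:
        return abs(self.y2 - self.y1)


def parse_2d_bbox_line(line: str) -> Box2D:
    # Assumption: 19 whitespace-separated fields; document field k is f[k - 1].
    f = line.split()
    if len(f) != 19:
        raise ValueError(f"expected 19 fields, got {len(f)}")
    x1, y1, x2, y2 = (float(x) for x in f[4:8])
    return Box2D(
        class_name=f[0],
        x1=x1, y1=y1, x2=x2, y2=y2,
        distance=float(f[15]),
        rel_velocity=tuple(float(x) for x in f[16:19]),
    )
```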

Files are named and saved in the same way as RGB images.

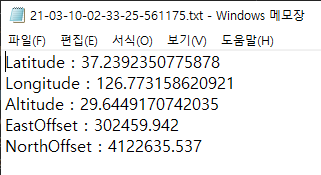

GPS data

File format : txt file

The GPS text file contains the following fields:

1 : Latitude (unit : deg)

2 : Longitude (unit : deg)

3 : Altitude (unit : m)

4 : EastOffset (unit : m)

5 : NorthOffset (unit : m)
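A minimal parsing sketch, assuming the five values appear in the order listed above and are separated by whitespace or commas (the exact layout inside the file is not specified here). GpsSample and parse_gps_text are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class GpsSample:
    latitude_deg: float
    longitude_deg: float
    altitude_m: float
    east_offset_m: float
    north_offset_m: float


def parse_gps_text(text: str) -> GpsSample:
    # Assumption: five values in the documented order, separated by whitespace
    # or commas (one value per line or all on one line both work).
    values = [float(x) for x in text.replace(",", " ").split()]
    if len(values) != 5:
        raise ValueError(f"expected 5 values, got {len(values)}")
    return GpsSample(*values)
```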

Files are saved in SaveFile/SensorData/GPS_*. File names are generated from the date and time at which the files are saved.

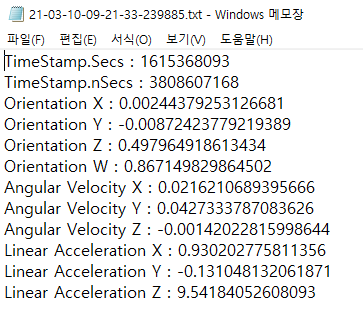

IMU data

File format : txt file

The IMU text file contains the following fields:

1-2 : Timestamp. Field 1 is in seconds and field 2 is in nanoseconds.

3-6 : Orientation X, Y, Z, W, expressed as a quaternion.

7-9 : Angular Velocity X, Y, Z for X, Y, Z axis components (unit : rad/s)

10-12 : Linear Acceleration X, Y, Z for X, Y, Z axis components (unit : m/s^2)
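A parsing sketch follows, assuming the 12 values appear in the order listed and are separated by whitespace or commas. It combines the two timestamp fields into seconds and extracts a heading from the quaternion using the standard Z-Y-X (yaw-pitch-roll) convention. The reference frame of the orientation is not specified above, so treat the yaw value as illustrative. ImuSample and parse_imu_text are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class ImuSample:
    stamp_s: float              # fields 1-2 combined into seconds
    orientation_xyzw: tuple     # fields 3-6, quaternion X, Y, Z, W
    angular_velocity: tuple     # fields 7-9, rad/s
    linear_acceleration: tuple  # fields 10-12, m/s^2

    def yaw(self) -> float:
        """Rotation about z in radians, using the Z-Y-X (yaw-pitch-roll) convention."""
        x, y, z, w = self.orientation_xyzw
        return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def parse_imu_text(text: str) -> ImuSample:
    # Assumption: 12 values in the documented order, separated by whitespace or commas.
    f = text.replace(",", " ").split()
    if len(f) != 12:
        raise ValueError(f"expected 12 values, got {len(f)}")
    sec, nsec = int(float(f[0])), int(float(f[1]))
    v = [float(x) for x in f[2:]]
    return ImuSample(
        stamp_s=sec + nsec * 1e-9,
        orientation_xyzw=tuple(v[0:4]),
        angular_velocity=tuple(v[4:7]),
        linear_acceleration=tuple(v[7:10]),
    )
```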

Files are saved in SaveFile/SensorData/IMU_*. File names are generated from the date and time at which the files are saved.

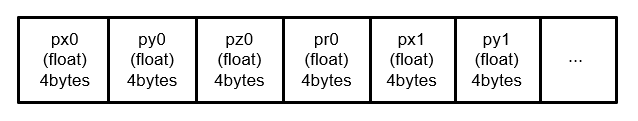

Radar Data

File format: bin file

Radar data is saved in the .bin file as a 1-D array of 4-byte values. Each cluster is described by its position, velocity, acceleration, and size along the x, y, and z axes, followed by its amplitude. The origin is the point at which the radar is mounted.

1-3 : Position of cluster in x, y, z

4-6 : Velocity components of cluster in x, y, z

7-9 : Acceleration components of cluster in x, y, z

10-12 : Size of cluster in x, y, z

13 : Amplitude

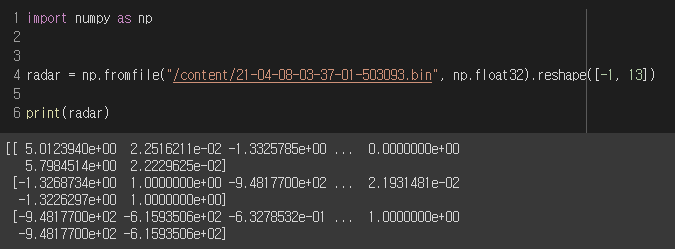

For Python users, the data can be loaded with the NumPy fromfile function.
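A minimal loading sketch, assuming each 4-byte value is a little-endian float32 and each cluster occupies 13 consecutive values in the order listed above. load_radar_bin and split_radar are hypothetical names.

```python
import numpy as np

# Assumption: each cluster is 13 consecutive little-endian float32 values
# in the documented order (position, velocity, acceleration, size, amplitude).
RADAR_FIELDS = 13


def load_radar_bin(path: str) -> np.ndarray:
    """Load a radar .bin file and return an (N, 13) array, one row per cluster."""
    flat = np.fromfile(path, dtype="<f4")
    if flat.size % RADAR_FIELDS != 0:
        raise ValueError(f"{path}: {flat.size} values is not a multiple of {RADAR_FIELDS}")
    return flat.reshape(-1, RADAR_FIELDS)


def split_radar(clusters: np.ndarray) -> dict:
    """Slice the (N, 13) array into named per-cluster components."""
    return {
        "position": clusters[:, 0:3],      # fields 1-3
        "velocity": clusters[:, 3:6],      # fields 4-6
        "acceleration": clusters[:, 6:9],  # fields 7-9
        "size": clusters[:, 9:12],         # fields 10-12
        "amplitude": clusters[:, 12],      # field 13
    }
```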

Files are saved in SaveFile/SensorData/RADAR_*. File names are generated from the date and time at which the radar data is saved.